In this short tutorial, we will run through how to create your own scraping recipes and use them inside a workflow.

Scraping recipes enable you to create your own bots to scrape data from any website via a point-and-click browser.

There are various ways of using scraping recipes:

- You can create a scraping recipe based on a single search result or category page to automatically capture all the listing names and URLs (paginating through all the pages available).

- Or you can provide a list of product or listing page URLs (using the data input automation) and scrape specific fields for each URL (keep in mind that all pages need to share the same HTML template).

- You can also use scraping recipe templates to speed up the process and retrieve the required data without creating the recipes on your own.

In this tutorial, we will cover all three scenarios and show how you can combine the first two approaches to scrape a wide range of websites.

How to create scraping recipes to capture a list of product or listing URLs

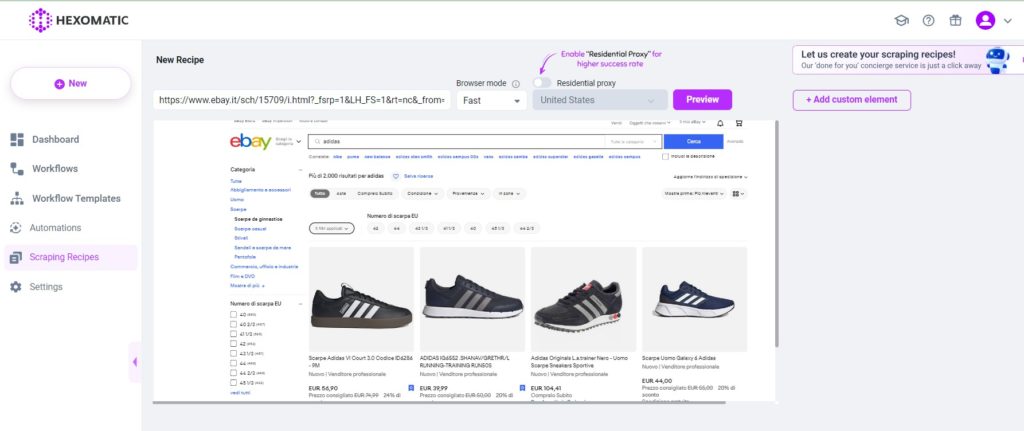

To get started, go to the website you want to scrape and find the search result page that shows all the listings or products you want to capture. If the site lets you choose how many products to show per page, we recommend setting it to the maximum. Then copy the URL from your browser.

Then head over to Hexomatic and create a scraping recipe by clicking on the “+” button, and then choosing the blank scraping recipe option.

Next, use the URL you captured and click preview.

Once the page has loaded, click on any element you want to capture.

Then choose whether you want to select that specific element only or have Hexomatic select all the matching elements found on the page. In this case, use the “select all” option to get all the product names and product destination URLs.

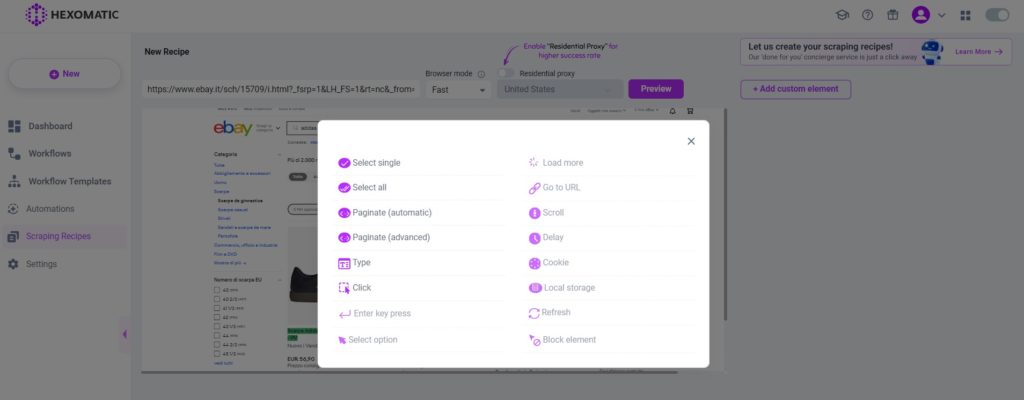

Next, label your field (for example, “Product name”) and choose the type of data you want to capture from it. For example:

- Text

- Numbers

- Link URLs

- Source URLs (for example, image URLs)

- HTML tags

- Email addresses

- Phone numbers

- Dates

You can specify as many fields to capture as needed on the page, but in this scenario, we are interested in getting the product names and URLs.
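To make the “select all” idea concrete, here is a minimal Python sketch of what capturing every matching element on a listing page amounts to. It is only an illustration, not how Hexomatic works internally: the sample markup, the `title` class, and the `shop.example.com` URL are all made up for the example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical listing markup; real pages will look different.
SAMPLE_LISTING = """
<ul>
  <li class="product"><a class="title" href="/p/red-mug">Red mug</a></li>
  <li class="product"><a class="title" href="/p/blue-mug">Blue mug</a></li>
  <li class="product"><a class="title" href="/p/green-mug">Green mug</a></li>
</ul>
"""


class ProductLinkParser(HTMLParser):
    """Collects the text and href of every <a class="title"> element."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.rows = []      # one dict per matched element
        self._href = None   # href of the link currently being read
        self._text = []     # text fragments of that link

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        if tag == "a" and "title" in classes:
            # Resolve relative links against the page URL.
            self._href = urljoin(self.base_url, attrs.get("href") or "")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.rows.append({
                "Product name": "".join(self._text).strip(),
                "Product URL": self._href,
            })
            self._href = None


def scrape_listing(html, base_url):
    """Return one row per matching element, like a 'select all' field pair."""
    parser = ProductLinkParser(base_url)
    parser.feed(html)
    return parser.rows


rows = scrape_listing(SAMPLE_LISTING, "https://shop.example.com/mugs")
for row in rows:
    print(row)
```

Each row pairs a product name with its destination URL, which is the same shape of data the recipe in this scenario is meant to produce.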

If the products or listings are spread over more than one page, choose one of the pagination options from the menu.
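Conceptually, pagination means repeating the same capture on each page until there is no next page left. The sketch below shows that loop in Python under simplifying assumptions: the site is a small in-memory dictionary, and `fetch_page`, `scrape_all_pages`, and the `max_pages` safety limit are hypothetical names chosen for this example rather than Hexomatic settings.

```python
# Hypothetical paginated site held in memory:
# page URL -> (items found on that page, URL of the next page or None)
FAKE_SITE = {
    "https://shop.example.com/mugs?page=1": (
        ["Red mug", "Blue mug"],
        "https://shop.example.com/mugs?page=2",
    ),
    "https://shop.example.com/mugs?page=2": (
        ["Green mug"],
        "https://shop.example.com/mugs?page=3",
    ),
    "https://shop.example.com/mugs?page=3": (["Black mug"], None),
}


def fetch_page(url):
    """Stand-in for loading a page and reading its items and 'next' link."""
    return FAKE_SITE[url]


def scrape_all_pages(start_url, max_pages=50):
    """Follow 'next' links from start_url and collect the items of every page."""
    items = []
    seen = set()  # guards against pagination loops
    url = start_url
    while url is not None and url not in seen and len(seen) < max_pages:
        seen.add(url)
        page_items, url = fetch_page(url)
        items.extend(page_items)
    return items


all_items = scrape_all_pages("https://shop.example.com/mugs?page=1")
print(all_items)
```

The `seen` set and the page limit are there so the loop always ends, even if a site links its last page back to an earlier one.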

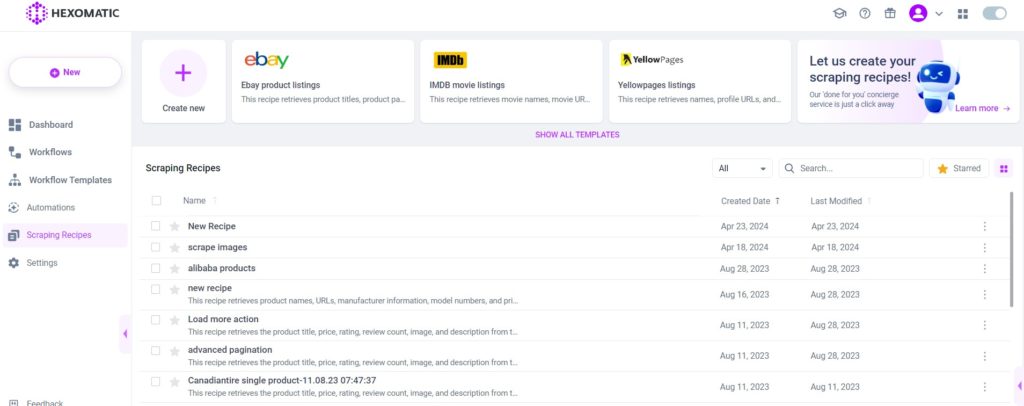

Lastly, name your recipe at the top of the page and click save.

Your recipe will be saved in the scraping recipes section.

To run your scraping recipe, simply use it in a new workflow. Workflows also let you chain additional scraping recipes or automations and specify whether to run once or on a recurring schedule.

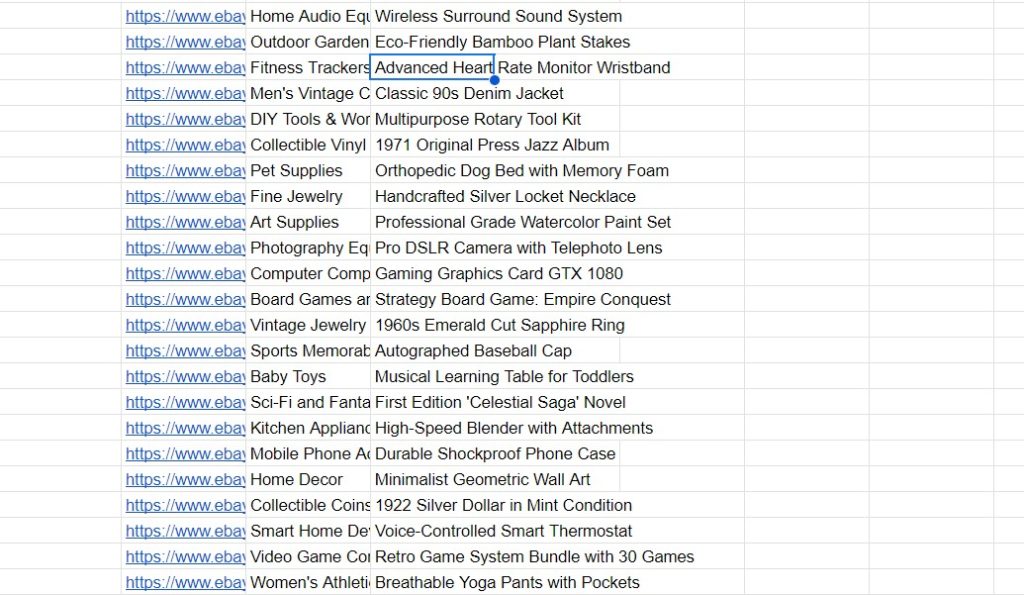

When the workflow has been completed, you will be able to download the result in your preferred file format.

How to create scraping recipes to capture specific fields from single listing or product pages

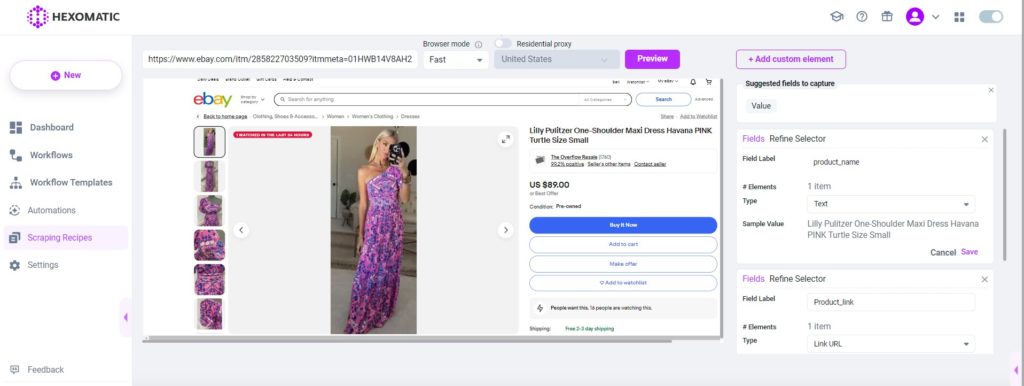

In this scenario, we already have a list of product or listing URLs that all share the same HTML template, and we want to capture the product or listing details for each one.

To get started, go to one of the single listing or product pages in your browser and copy the URL.

Create a scraping recipe by clicking on the “+” button, and then choosing the blank scraping recipe option.

Next, use the URL you captured and click preview.

Then click on each of the elements you want to capture, choosing the “Select Single” option each time. For example, you could capture:

- Product title

- Product description

- Product image

- Product price

Label each field and choose whether you want to capture text, numbers, source URLs (for image URLs), destination URLs, phone numbers, email addresses, or dates.
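Choosing a data type for a field is, in effect, deciding how the captured text should be interpreted. The Python sketch below illustrates that idea for two of the types, numbers and email addresses. The helper names and the regular expressions are assumptions made for this example (they treat commas as thousands separators and use a deliberately simple email pattern); they are not Hexomatic's own parsing rules.

```python
import re


def as_number(text):
    """Return the first number in the text as a float, or None if there is none.

    Assumes commas are thousands separators, e.g. "1,299.50".
    """
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    if match is None:
        return None
    return float(match.group(0).replace(",", ""))


def find_emails(text):
    """Return every email-like string in the text (simple pattern, not RFC-complete)."""
    return re.findall(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+", text)


# Raw text as it might be captured from a product page (made-up values).
raw = {
    "Product title": "  Red mug  ",
    "Product price": "$1,299.50",
    "Contact": "Contact sales@example.com or help@example.org.",
}

typed = {
    "Product title": raw["Product title"].strip(),       # text
    "Product price": as_number(raw["Product price"]),    # number
    "Contact": find_emails(raw["Contact"]),               # email addresses
}
print(typed)
```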

Next, name your recipe and click “Save”.

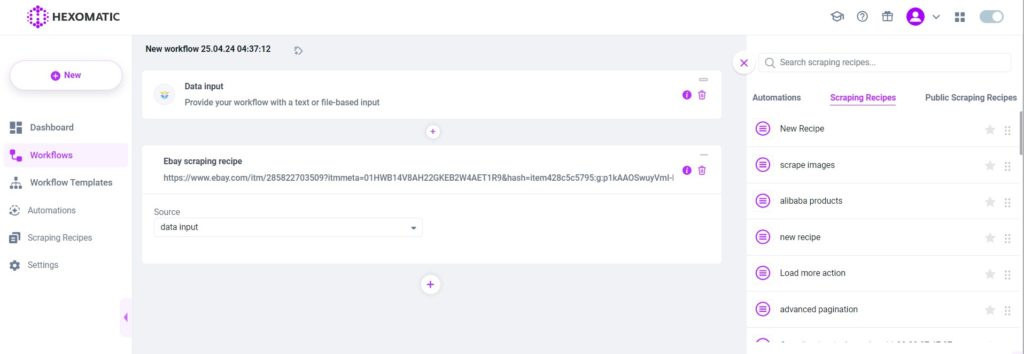

To run your scraping recipe, create a new workflow for it. You then have two ways to run it:

1/ Use the “Data input” automation and paste in the list of URLs you generated with the previous scraping recipe. Then chain the scraping recipe to fetch the product details, specifying data input as the source of the scraping recipe.

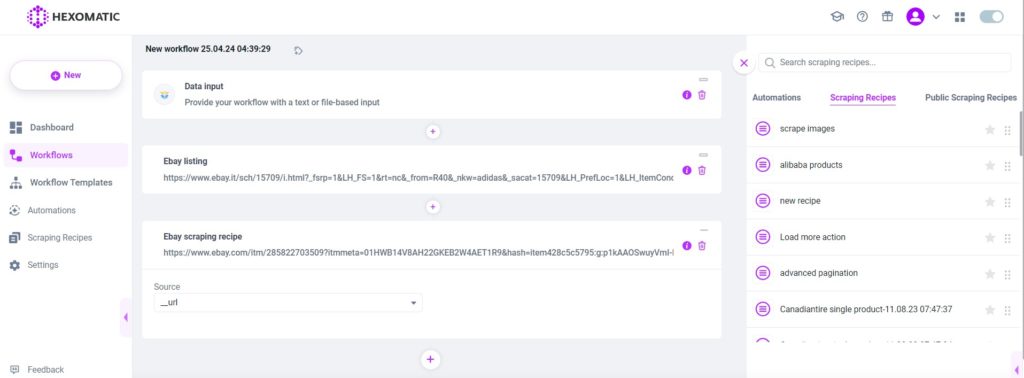

2/ Chain both scraping recipes together, one after the other.

To do this, create a new workflow and first add the scraping recipe that fetches the listing/product URLs.

Then add the scraping recipe that fetches the product/listing details, specifying the URL field as the source.
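Chaining the two recipes works like feeding the output of one function into another: the first produces rows containing a URL field, and the second is run once per URL. Here is a minimal Python sketch of that data flow. Everything in it is hypothetical, including the function names, the `Product URL` field, and the in-memory sample data; it shows the shape of the chain, not Hexomatic's implementation.

```python
# Made-up data standing in for a real site.
LISTING_PAGES = {
    "https://shop.example.com/mugs": [
        "https://shop.example.com/p/red-mug",
        "https://shop.example.com/p/blue-mug",
    ],
}
PRODUCT_PAGES = {
    "https://shop.example.com/p/red-mug": {"Product title": "Red mug", "Product price": 9.5},
    "https://shop.example.com/p/blue-mug": {"Product title": "Blue mug", "Product price": 11.0},
}


def listing_recipe(listing_url):
    """First step: return one row per product URL found on the listing page."""
    return [{"Product URL": url} for url in LISTING_PAGES[listing_url]]


def detail_recipe(product_url):
    """Second step: return the fields captured from a single product page."""
    return dict(PRODUCT_PAGES[product_url])


def run_workflow(listing_url, source_field="Product URL"):
    """Chain both steps, using source_field of step one as the input of step two."""
    results = []
    for row in listing_recipe(listing_url):
        details = detail_recipe(row[source_field])
        results.append({**row, **details})
    return results


results = run_workflow("https://shop.example.com/mugs")
for result in results:
    print(result)
```

The `source_field` argument plays the role of the URL field you pick as the source of the second recipe. The “Data input” option follows the same pattern, except that the list of URLs is pasted in rather than produced by a first step.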

Next, name the workflow and choose whether to run it now or schedule a recurring task.

When the workflow has been completed, you will be able to download the result in your preferred file format.

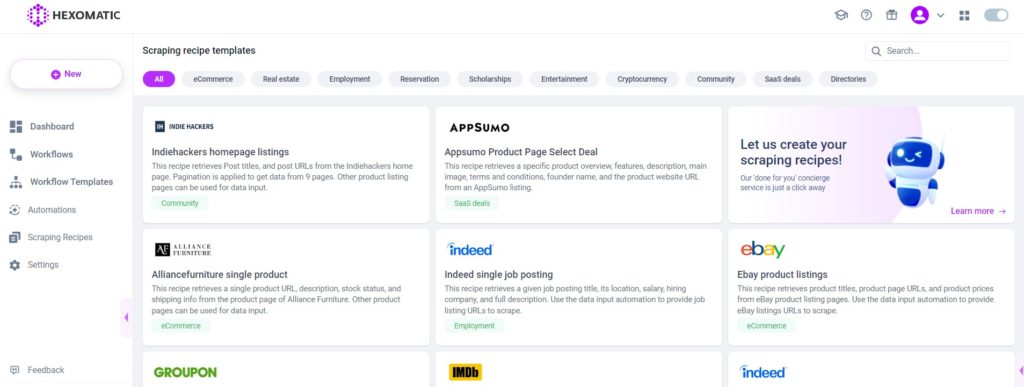

Use scraping recipe templates to retrieve data faster

The great thing about Hexomatic is that you don’t always have to create a scraping recipe to retrieve data. We have created scraping recipe templates to save you time.

Simply go to the scraping recipe templates and choose the one you need. Then click on the “Use in a workflow” button and you’ll start a new workflow with that recipe.

You can also edit the existing templates by adding or removing specific elements.
