In this short tutorial, we will run through how Hexomatic works and the core concepts to keep in mind when running your workflows.

Hexomatic consists of three core elements: scraping recipes, automations, and workflows.

The key point to remember is that everything in Hexomatic runs inside a workflow. This is where you add scraping recipes or automations and decide the output and scheduling.

Let’s look into each core element in more detail:

1/ Workflows

– Get started fast with ready-made workflows

A workflow combines automations and scraping recipes. It is where you run them, schedule them, and collect the output.

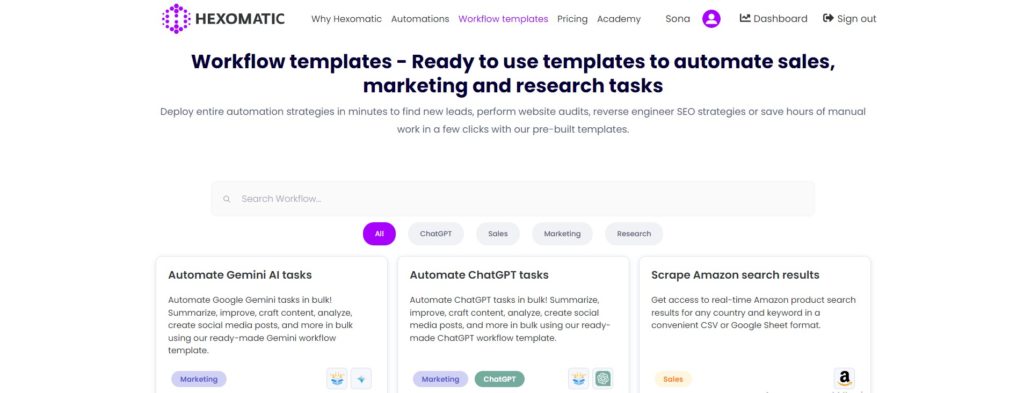

Hexomatic offers ready-made workflow templates that you can use to complete various tasks. Simply go to the Workflow Templates section of the website and choose the template you need. These ready-made workflows are created to automate tasks and scrape information related to sales, marketing, research, and ChatGPT.

With the help of workflow templates, you can complete tasks such as:

- Automate ChatGPT tasks in bulk

- Scrape Amazon search results

- Scrape phone numbers from a list of URLs

- Categorize a list of website URLs

- Extract all the URLs from an XML sitemap

- Find SEO guest post opportunities

- Discover influencers’ contact details

- Carry out market analyses

Browse all the workflow templates, upload your info, and run the workflow in minutes!

– Create your own workflow by combining automation and scraping recipes

The best way to visualize how workflows work is to picture a spreadsheet with columns and rows. When you run a scraping recipe, for example, you are essentially populating the spreadsheet with rows of structured data captured from web pages.

When you then add an automation to your workflow, it runs once for each row of the spreadsheet, using the input field you choose. For example, if you want to detect social media links for each page, you can run that automation on the URL field.

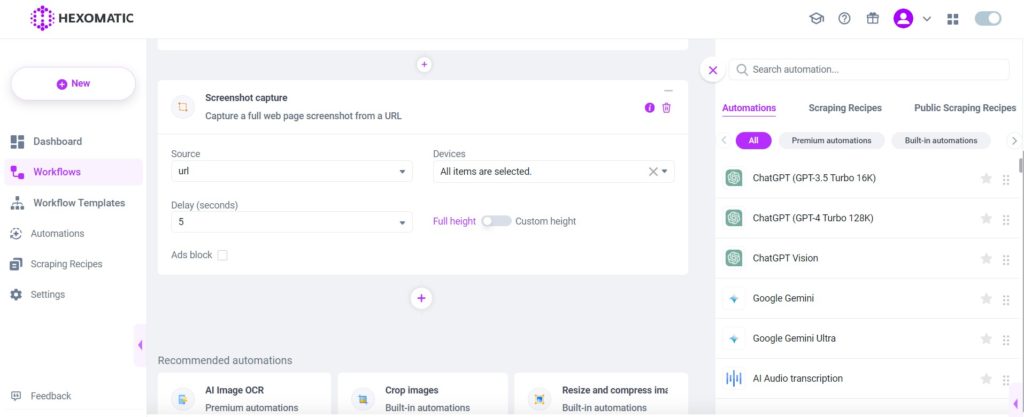

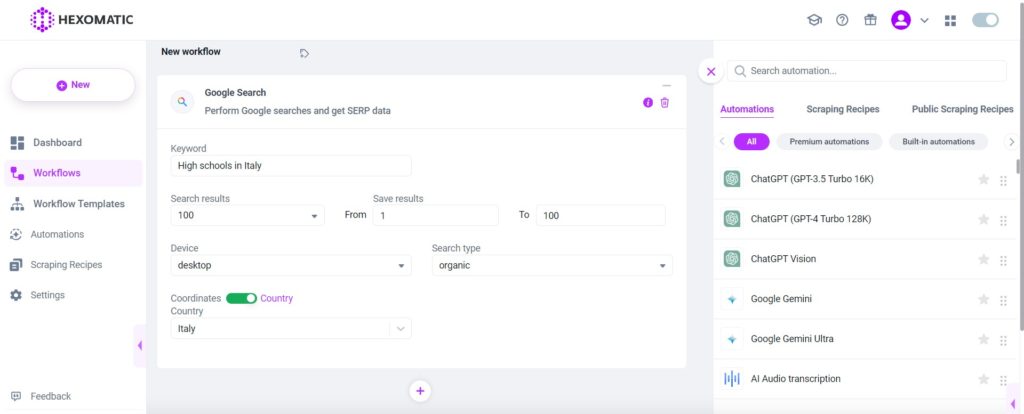
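The spreadsheet picture can be sketched in a few lines of plain Python. This is a conceptual model only, not Hexomatic's API: `scraping_recipe`, `detect_social_links`, and `run_automation` are hypothetical names invented for illustration, and the data is faked rather than fetched.

```python
# Conceptual model only: plain Python, not Hexomatic's API.

def scraping_recipe():
    """Stand-in for a scraping recipe: returns rows of structured data."""
    return [
        {"name": "Shop A", "url": "https://shop-a.example"},
        {"name": "Shop B", "url": "https://shop-b.example"},
    ]

def detect_social_links(url):
    """Hypothetical automation: one input value in, one output value out."""
    # A real automation would inspect the page; here the result is faked.
    domain = url.split("//")[1]
    return f"https://twitter.example/{domain}"

def run_automation(rows, automation, input_field, output_field):
    """Run the automation once per row and store its result in a new column."""
    return [{**row, output_field: automation(row[input_field])} for row in rows]

rows = scraping_recipe()
rows = run_automation(rows, detect_social_links, "url", "social_link")
for row in rows:
    print(row)
```

Each row keeps its original columns and gains a new one, which mirrors how an automation extends the spreadsheet rather than replacing it.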

When you create a workflow, you choose the name and drag & drop the scraping recipe or automations you would like to run. For each, you will need to specify an input field to run the automation or scraping recipe from.

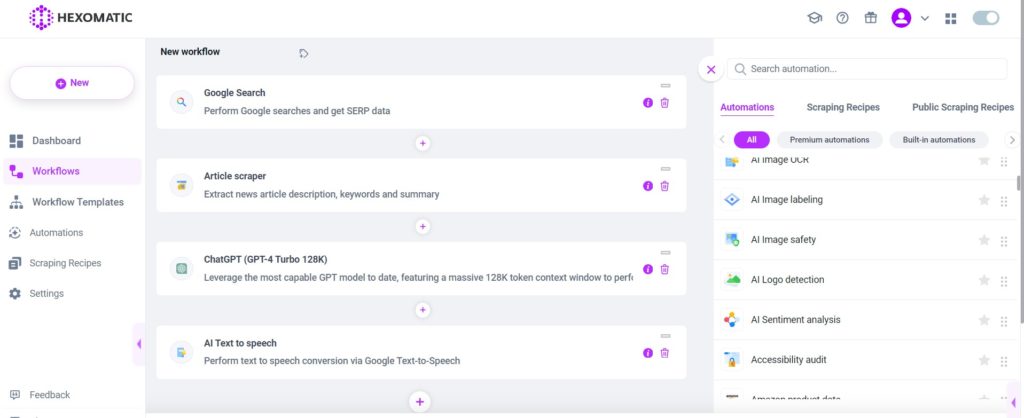

The great thing about workflows is that you can chain together as many scraping recipes or automations as you need.
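One way to think about chaining is as an ordered list of steps, where each step reads a column produced earlier and adds a new one. The sketch below assumes that model; `add_column` and `run_workflow` are hypothetical helpers, not part of Hexomatic.

```python
from functools import reduce

def add_column(input_field, output_field, fn):
    """Build a step that derives a new column from an existing one."""
    def step(rows):
        return [{**row, output_field: fn(row[input_field])} for row in rows]
    return step

def run_workflow(rows, steps):
    """Apply each step in order; the output of one step feeds the next."""
    return reduce(lambda current, step: step(current), steps, rows)

# Three chained steps, each using the previous step's output as its input.
steps = [
    add_column("title", "title_clean", str.strip),
    add_column("title_clean", "title_upper", str.upper),
    add_column("title_upper", "length", len),
]
result = run_workflow([{"title": "  blue mug "}], steps)
print(result)
```

Because every step only needs to know its input field, steps can be added, removed, or reordered without rewriting the others.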

When you click Continue, you can choose whether to run the workflow once manually or on a recurring schedule (for example, weekly).

You can find all your workflows and their statuses in the “Workflows” section. When a workflow has run successfully, you can then download the results in your preferred file format.

If a workflow has failed, you can find out more by clicking on the workflow and checking the log to debug the issue.

2/ Automations

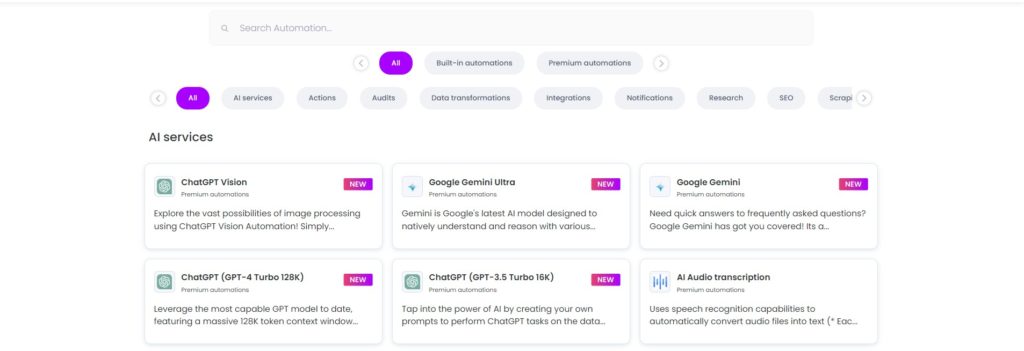

Automations, on the other hand, are pre-built and enable you to perform actions inside a workflow, for example fetching search results from Google, detecting social media or email contact details, transforming text, dates, or numbers, performing translations, etc.

You can find out more about each available automation in the “Automations” section. To run any automation, simply create a workflow and drag and drop the automations you would like to use.

– Get started fast using a single automation

Go to the Automations section and choose the automation that you want to use. Click on the “Create Workflow” button and fill in the required information.

Then, run the workflow or schedule it for your convenience.

– Perform advanced tasks by combining multiple automations

To carry out advanced tasks, you can also use multiple automations in the same workflow. Hexomatic will perform the different tasks at the same time, saving you time and energy.

Simply choose the automation you need from the right side of the screen and drag and drop it inside your workflow.

See our automation tutorials for more information about how each automation works.

3/ Scraping recipes

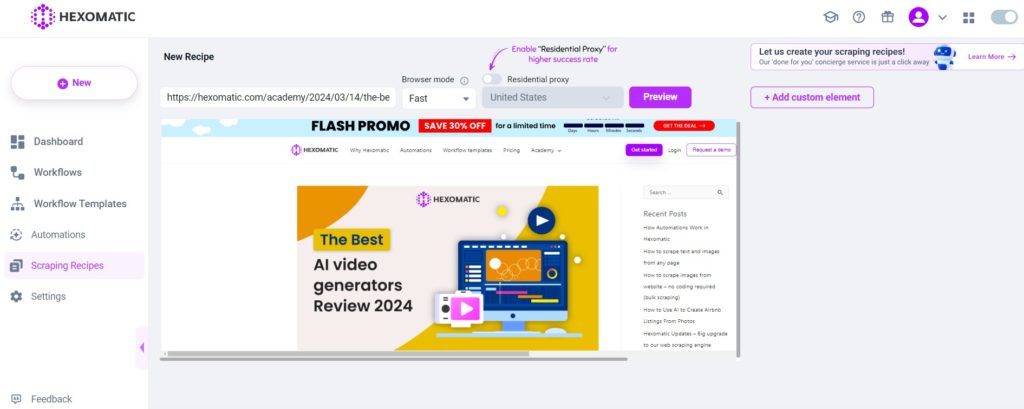

Scraping recipes enable you to create your own bots to scrape data from any website via a point-and-click browser.

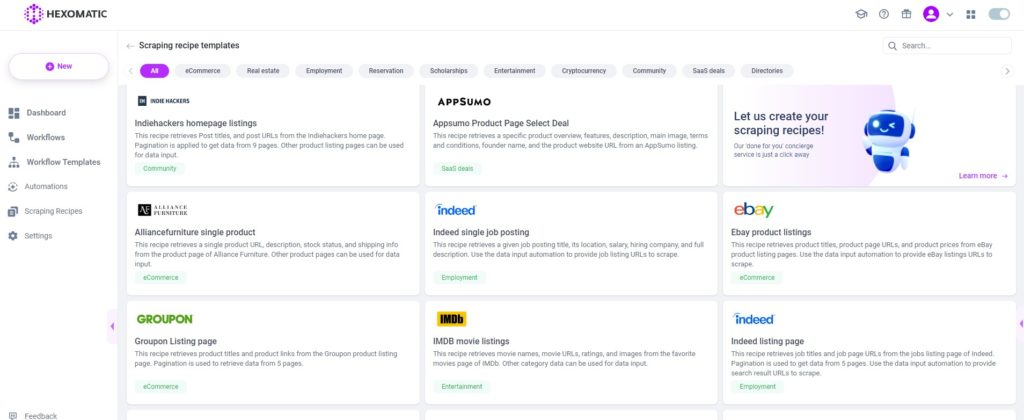

– Get started fast using our scraping recipe templates

To make it easier for you to use scraping recipes, Hexomatic offers scraping recipe templates. These templates allow anyone to scrape popular web pages, product pages, and listings.

Browse the page of scraping recipe templates and use them in a workflow by adding the required information.

– Get data from any website by creating your own scraping recipes

There are two ways of creating scraping recipes:

- You can create a scraping recipe based on a single search result or category page to automatically capture all the listing names and URLs (paginating through all the pages available).

- Or you can provide a list of product or listing page URLs (using the data input automation) and, for each URL, scrape specific fields (keep in mind that all pages need to share the same HTML template).
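The difference between the two approaches can be illustrated with a small simulation. Everything below is an assumption made for the example: the pages are in-memory dictionaries rather than real websites, and `scrape_listing` and `scrape_details` are hypothetical functions, not Hexomatic features.

```python
# Simulated site: in-memory stand-ins for pages, not real scraped data.
LISTING_PAGES = {
    1: {"items": [("Mug", "/p/mug"), ("Cup", "/p/cup")], "next": 2},
    2: {"items": [("Bowl", "/p/bowl")], "next": None},
}
DETAIL_PAGES = {
    "/p/mug": {"title": "Mug", "price": "9.99"},
    "/p/cup": {"title": "Cup", "price": "4.50"},
    "/p/bowl": {"title": "Bowl", "price": "12.00"},
}

def scrape_listing(start_page):
    """Approach 1: start from one listing page and follow the pagination."""
    rows, page = [], start_page
    while page is not None:
        data = LISTING_PAGES[page]
        rows.extend({"name": name, "url": url} for name, url in data["items"])
        page = data["next"]
    return rows

def scrape_details(urls, fields):
    """Approach 2: visit each URL and pull the same fields from every page."""
    # This only works because every detail page shares the same structure.
    return [{"url": u, **{f: DETAIL_PAGES[u][f] for f in fields}} for u in urls]

listing_rows = scrape_listing(1)
detail_rows = scrape_details([r["url"] for r in listing_rows], ["title", "price"])
print(listing_rows)
print(detail_rows)
```

The two approaches combine naturally: the first produces a list of URLs, and the second turns each of those URLs into a row of specific fields.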

Scraping recipes enable you to capture the following elements from a page:

- Text

- Numbers

- Link URLs

- Source URLs (for example image URLs)

- HTML tags

- Email addresses

- Phone numbers

- Dates

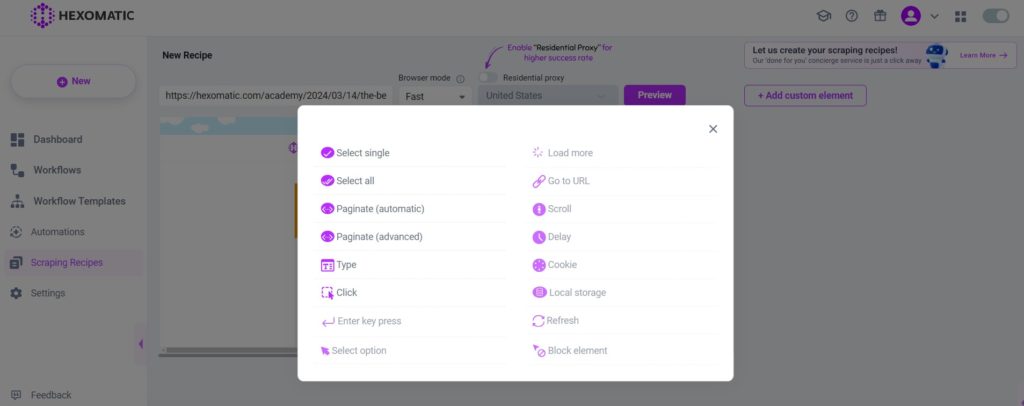

To get started, simply load a page and then click on any element. Hexomatic then enables you to:

- Click

- Select that specific element

- Select all matching elements on the page (for example, all the product titles or URLs), and more.
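To make the difference between selecting one element and selecting all matching elements concrete, here is a minimal sketch using Python's standard `html.parser` module. It is not how Hexomatic works internally; the sample HTML, the `title` class, and the `ClassTextCollector` helper are all made up for illustration.

```python
from html.parser import HTMLParser

class ClassTextCollector(HTMLParser):
    """Collect the text of every element that carries a given CSS class.

    Simplification: assumes matching elements contain text only (no nested tags).
    """

    def __init__(self, css_class):
        super().__init__()
        self.css_class = css_class
        self.matches = []
        self._capturing = False

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self.css_class in classes:
            self._capturing = True
            self.matches.append("")

    def handle_data(self, data):
        if self._capturing:
            self.matches[-1] += data

    def handle_endtag(self, tag):
        self._capturing = False

PAGE = """
<ul>
  <li><h2 class="title">Mug</h2><span class="price">9.99</span></li>
  <li><h2 class="title">Cup</h2><span class="price">4.50</span></li>
</ul>
"""

collector = ClassTextCollector("title")
collector.feed(PAGE)
all_titles = collector.matches   # like "select all matching elements"
first_title = all_titles[0]      # like "select that specific element"
print(all_titles, first_title)
```

Selecting all matching elements relies on the page marking similar items the same way, which is why listings with a consistent structure are the easiest to scrape.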

See our Scraping recipe tutorial for more information.

To run your scraping recipes, simply create a new workflow and drag and drop your scraping recipe.

