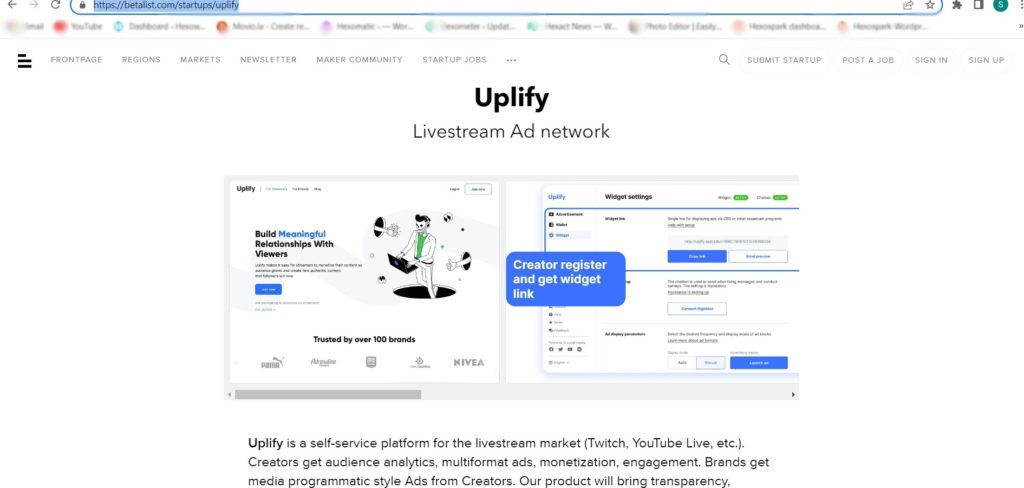

BetaList is a website that helps startups get exposure and grow their business.

It’s a platform for launching new products and services, and also a way to connect with potential customers and get feedback. The site has a community of entrepreneurs and is a good place to learn about new startups and products.

By scraping data from BetaList, you can research competitors, analyze markets, spot trends, and more.

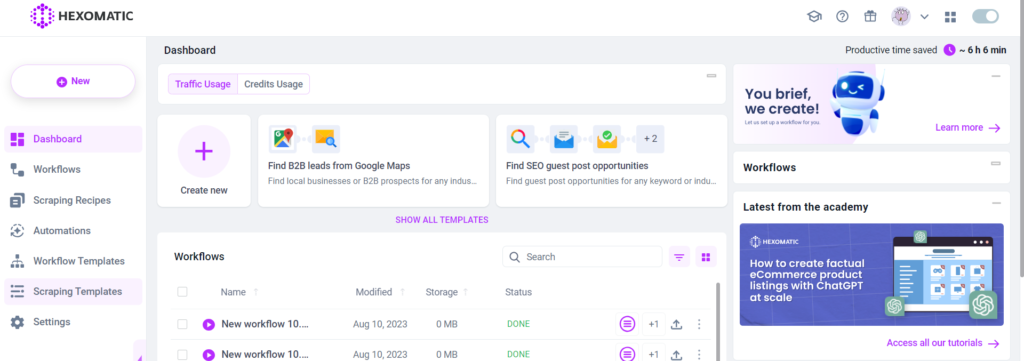

This tutorial shows you how to scrape BetaList startup listings in one click with Hexomatic’s public scraping recipe library. In particular, you will find out:

#1 How to scrape the BetaList homepage

#2 How to scrape category listings from BetaList

#3 How to scrape single product data from BetaList

#1 How to scrape the BetaList homepage

In this section, you will see how to scrape the BetaList homepage to get fresh startup data daily.

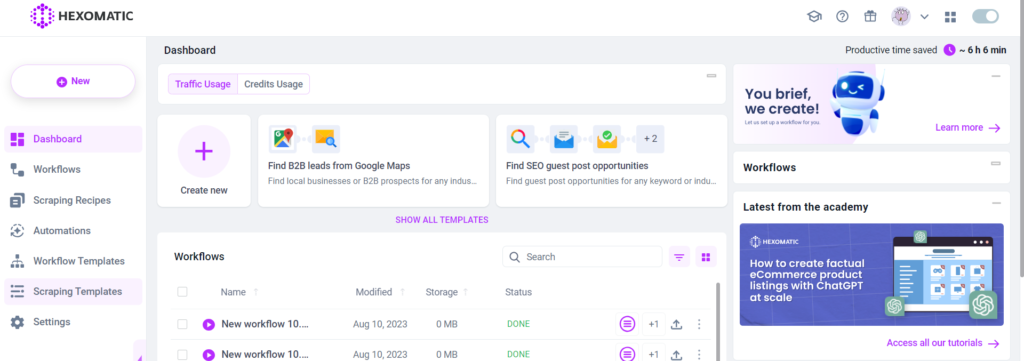

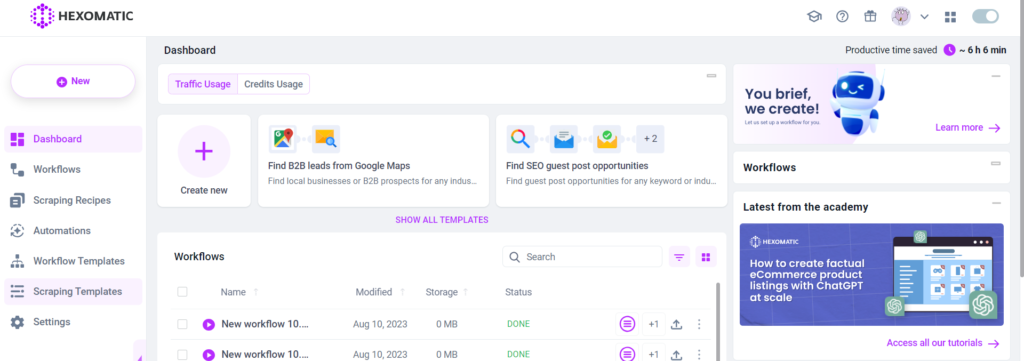

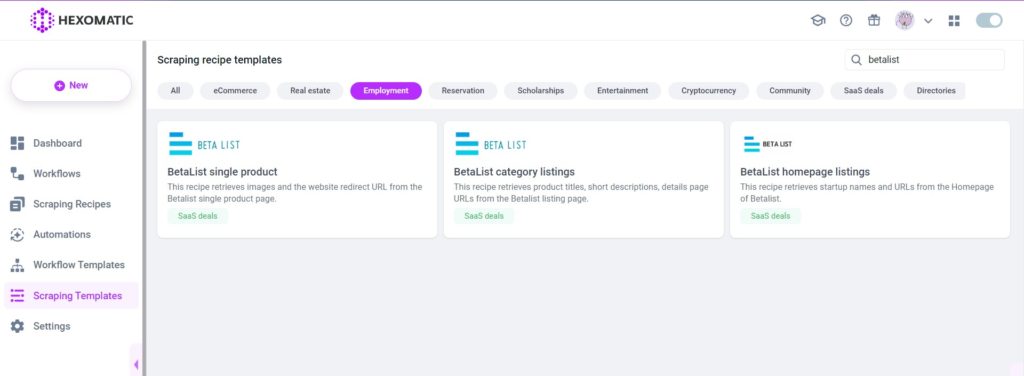

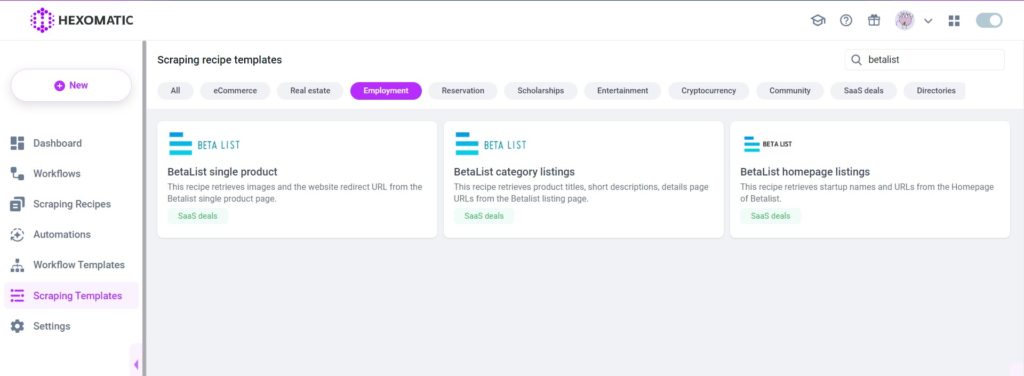

Step 1: Go to the Library of Scraping Templates

From your dashboard, select Scraping Templates to access the public scraping recipes.

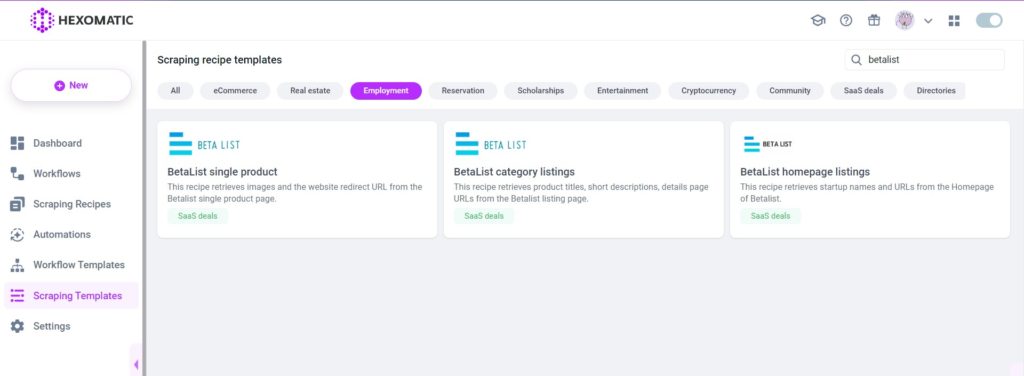

Step 2: Run the BetaList “Homepage listings” recipe

From the list of public scraping recipes, select the BetaList “Homepage listings” recipe, then click the “Use in a workflow” option.

After selecting the “Use in a workflow” option, click Continue.

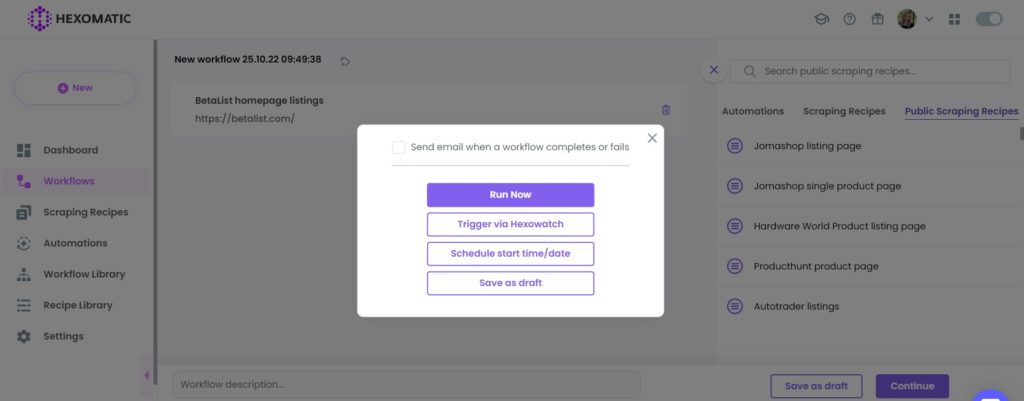

Step 3: Run the Workflow

Now, you can run the workflow to get the results.

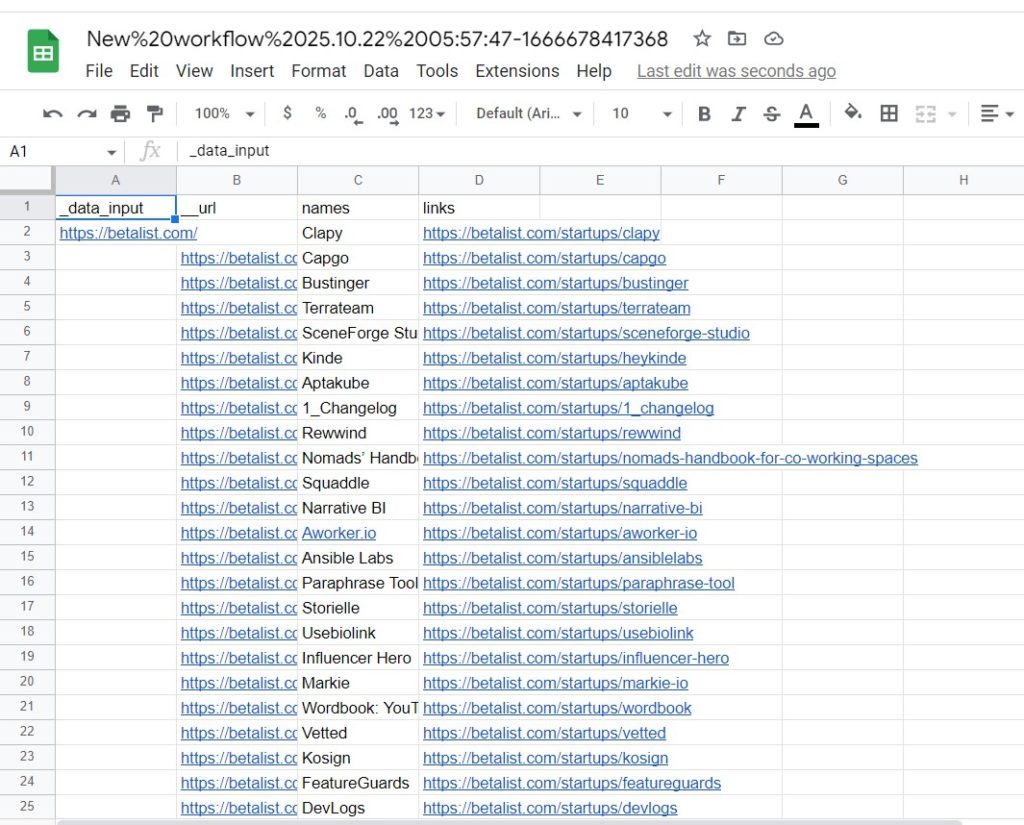

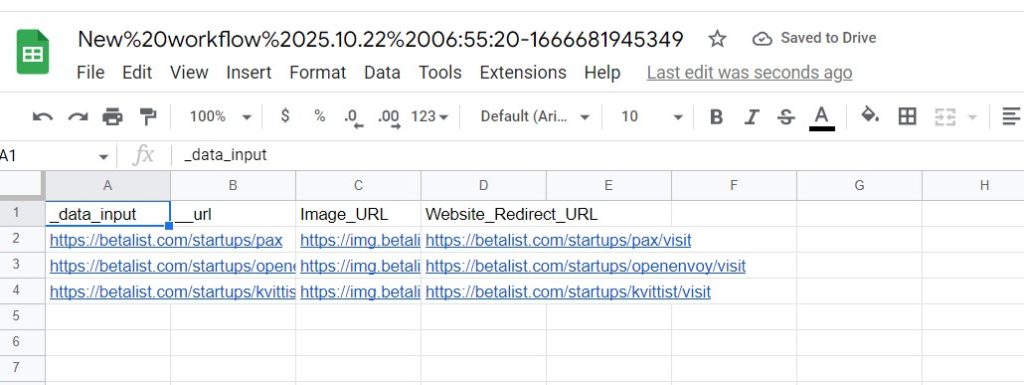

Step 4: View and Save the results

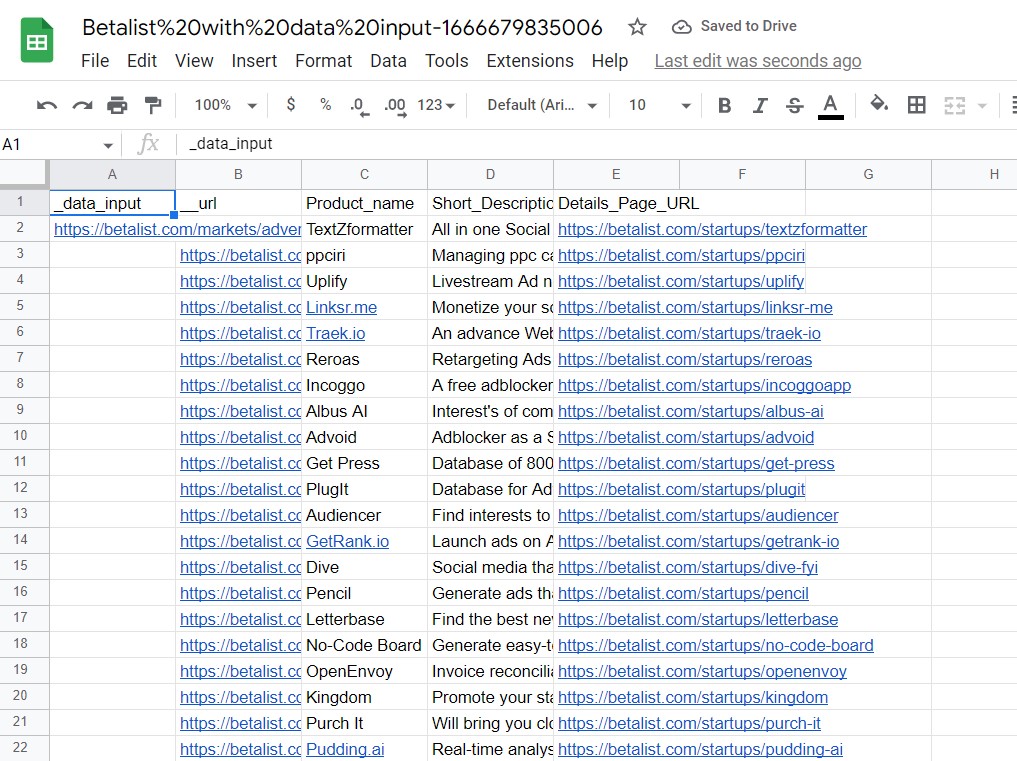

Once the workflow has finished running, you can view the results and export them to CSV or Google Sheets.
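If you run the homepage recipe every day, you may want to keep only the startups that did not appear in the previous export. Here is a minimal Python sketch that uses only the standard library. It assumes both runs were exported to CSV with the same header row and that one column uniquely identifies a startup. The column name `url` below is a hypothetical placeholder, so check the headers in your own export.

```python
import csv
import sys


def new_rows(previous_path: str, current_path: str, key: str = "url") -> list[dict]:
    """Return rows from the current export whose `key` value is absent from the previous export.

    `key` is a hypothetical column name; replace it with whichever column
    uniquely identifies a startup in your exported CSV.
    """
    # Collect the identifiers already seen in the previous run.
    with open(previous_path, newline="", encoding="utf-8") as f:
        seen = {row[key] for row in csv.DictReader(f)}
    # Keep only rows from the current run that were not seen before.
    with open(current_path, newline="", encoding="utf-8") as f:
        return [row for row in csv.DictReader(f) if row[key] not in seen]


if __name__ == "__main__":
    if len(sys.argv) != 3:
        print("usage: python new_startups.py PREVIOUS.csv CURRENT.csv")
    else:
        fresh = new_rows(sys.argv[1], sys.argv[2])
        print(f"{len(fresh)} new rows")
        for row in fresh:
            print(row)
```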

#2 How to scrape category listings from BetaList

Our scraping recipe library also includes a BetaList category listings recipe, which you can use to scrape any category on BetaList. Let’s see how to do that.

Step 1: Go to the Library of Scraping Templates

From your dashboard, select Scraping Templates to access the public scraping recipes.

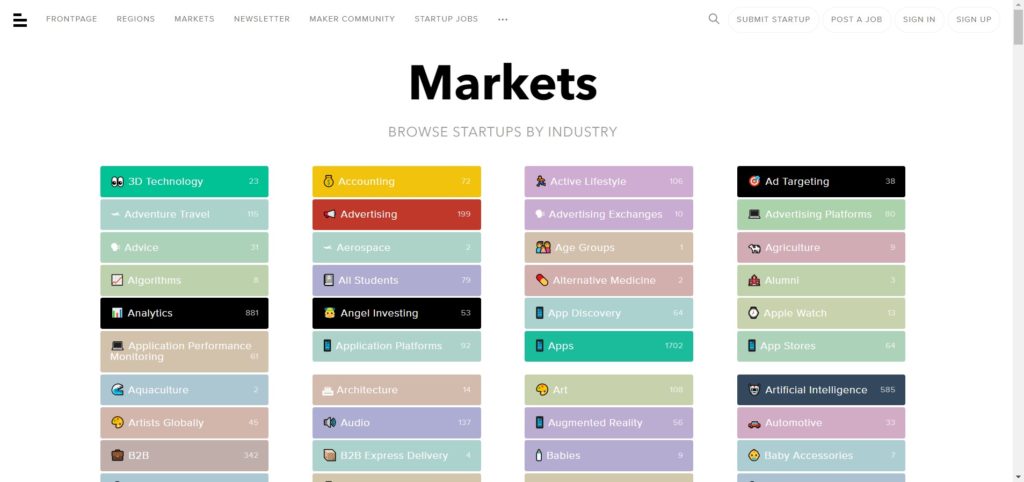

Step 2: Capture the desired BetaList category page URL(s)

Go to https://betalist.com/ and select your desired categories from the “Markets” section.

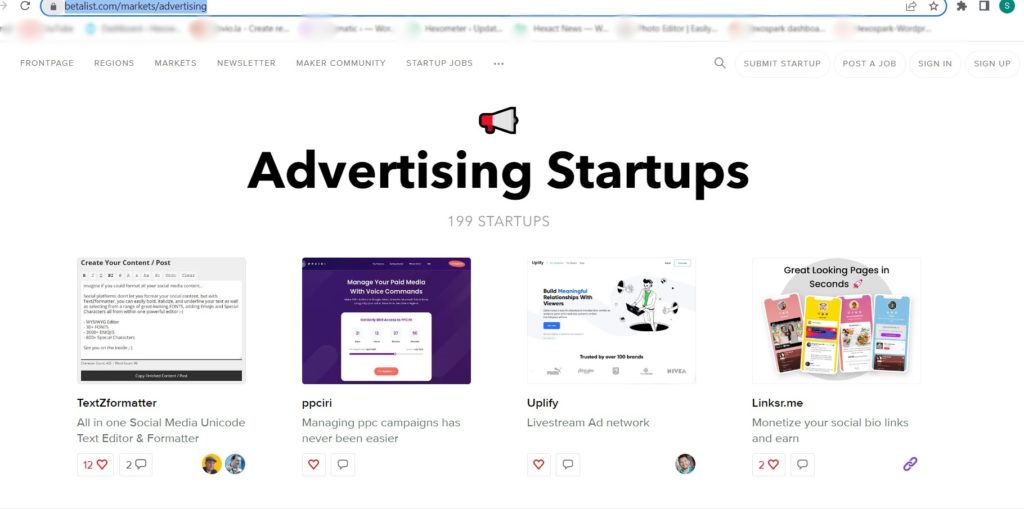

Click on any category and capture the page URL(s). You can capture a single page or a list of pages.
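If you plan to scrape several categories, you can build the whole list of category URLs at once and paste it into the workflow later. Here is a minimal Python sketch. It assumes category pages follow the pattern `https://betalist.com/markets/<slug>`, which you should confirm against the URL shown in your browser. The slugs in the example are illustrative placeholders.

```python
# Assumed URL pattern for category pages; verify it in your browser.
CATEGORY_BASE = "https://betalist.com/markets/"


def category_urls(slugs: list[str]) -> list[str]:
    """Build one category page URL per slug, skipping blanks and duplicates (order kept)."""
    seen: set[str] = set()
    urls: list[str] = []
    for slug in slugs:
        cleaned = slug.strip().strip("/").lower()
        if cleaned and cleaned not in seen:
            seen.add(cleaned)
            urls.append(CATEGORY_BASE + cleaned)
    return urls


if __name__ == "__main__":
    # Illustrative slugs; replace them with the categories you picked.
    # Prints one URL per line, ready to paste as a list of inputs.
    print("\n".join(category_urls(["saas", "productivity", "SaaS "])))
```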


Step 3: Select the BetaList category listings recipe

From the recipe library, choose the BetaList category listings recipe and click “Use in a workflow”.

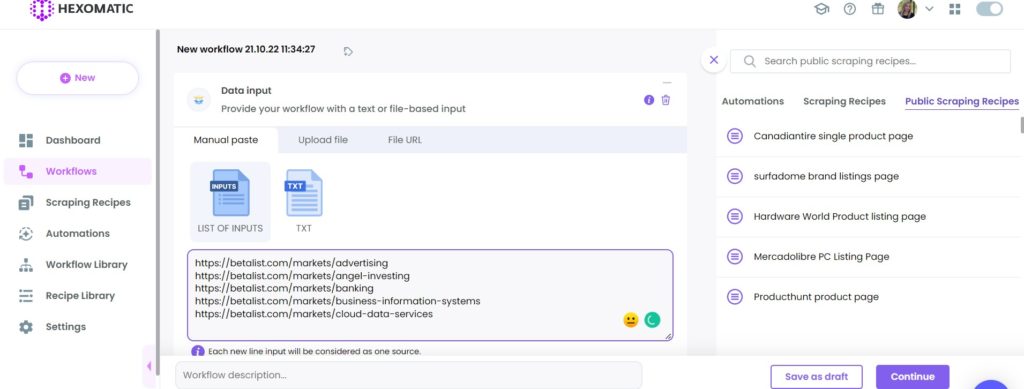

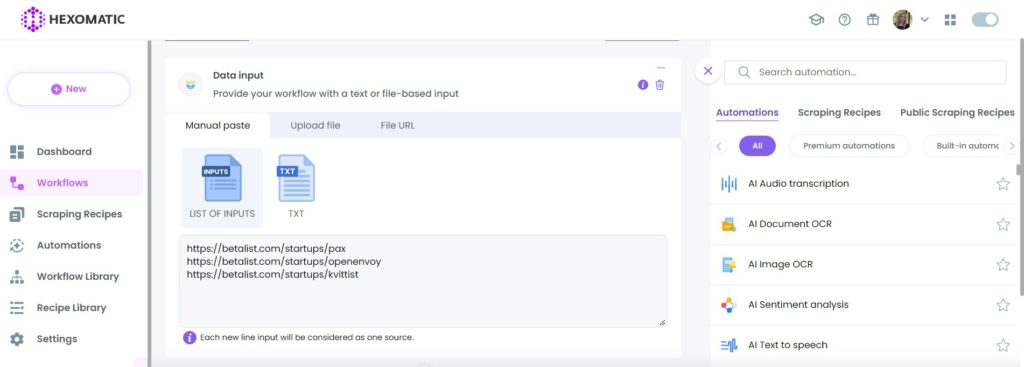

Step 4: Add captured category page URL(s)

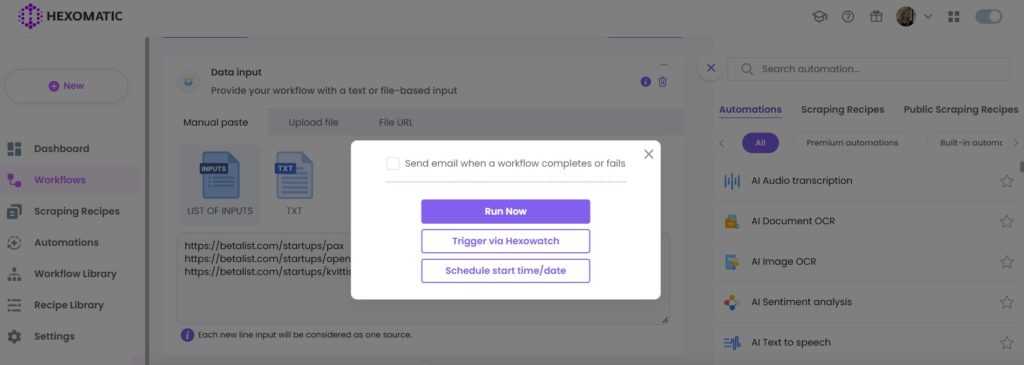

Once the workflow is created, choose the Data Input automation and add the previously captured page URL(s) using the Manual paste/list of inputs option. You can add a single URL or bulk URLs.
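Before you paste a bulk list, you may want to tidy it so the workflow doesn't scrape the same page twice. Here is a minimal Python sketch that uses only the standard library. It keeps only `betalist.com` links and treats `www.betalist.com` as the same host. It also drops blank lines, fragments, trailing slashes, and duplicates. These normalization rules are our own assumptions, not Hexomatic requirements.

```python
from urllib.parse import urlsplit, urlunsplit


def clean_url_list(text: str, host: str = "betalist.com") -> list[str]:
    """Normalize a pasted, newline-separated URL list and drop duplicates.

    Query strings are kept in case they carry pagination or filters;
    fragments (#...) are dropped because they point to the same page.
    """
    urls: list[str] = []
    seen: set[str] = set()
    for line in text.splitlines():
        raw = line.strip()
        if not raw:
            continue  # skip blank lines
        parts = urlsplit(raw)
        if parts.scheme not in ("http", "https"):
            continue  # skip anything that isn't a web URL
        netloc = parts.netloc.lower().removeprefix("www.")
        if netloc != host:
            continue  # skip links to other sites
        path = parts.path.rstrip("/") or "/"
        url = urlunsplit(("https", netloc, path, parts.query, ""))
        if url not in seen:
            seen.add(url)
            urls.append(url)
    return urls


if __name__ == "__main__":
    pasted = """
    https://betalist.com/markets/saas/
    https://www.betalist.com/markets/saas#top
    https://example.com/not-betalist
    """
    print("\n".join(clean_url_list(pasted)))
```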

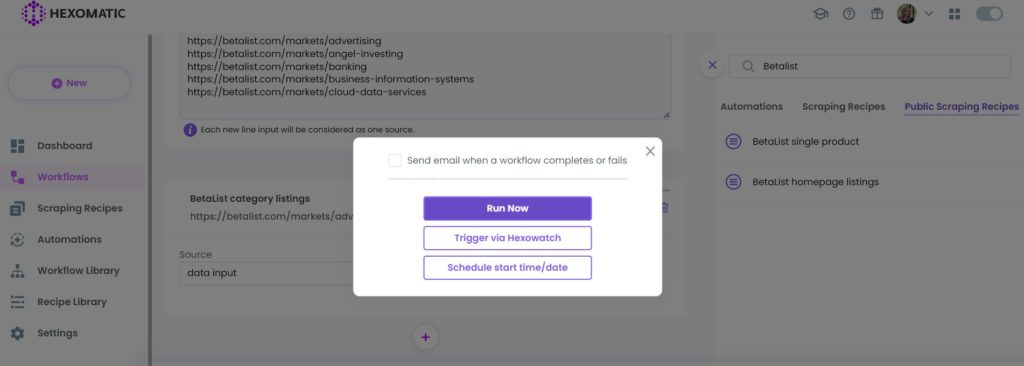

Step 5: Run the workflow

Run the workflow to get the results.

Step 6: View and Save the results

Once the workflow has finished running, you can view the results and export them to CSV or Google Sheets.

#3 How to scrape single product data from BetaList

Now, let’s see how you can automatically scrape data for a single product on BetaList using our public scraping recipe.

Step 1: Go to the Library of Scraping Templates

From your dashboard, select Scraping Templates to access the public scraping recipes.

Step 2: Capture the product page URL(s)

Go to https://betalist.com/, open the product page(s) you want to scrape, and capture their URL(s). You can capture one page or a list of pages to scrape automatically.
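If you collect product links in bulk, a quick filter can catch category or homepage links that slipped into the list. Here is a minimal Python sketch. It assumes BetaList product pages use the path `/startups/<slug>`, so compare that pattern with a product URL in your browser before relying on it. The product slug in the example is hypothetical.

```python
from urllib.parse import urlsplit


def is_product_url(url: str) -> bool:
    """Return True if `url` looks like a BetaList product page.

    Assumes product pages live at https://betalist.com/startups/<slug>.
    """
    parts = urlsplit(url.strip())
    host = parts.netloc.lower().removeprefix("www.")
    # Split the path into non-empty segments, e.g. ["startups", "example-product"].
    segments = [s for s in parts.path.split("/") if s]
    return (
        parts.scheme in ("http", "https")
        and host == "betalist.com"
        and len(segments) == 2
        and segments[0] == "startups"
    )


if __name__ == "__main__":
    captured = [
        "https://betalist.com/startups/example-product",  # hypothetical slug
        "https://betalist.com/markets/saas",
        "https://betalist.com/",
    ]
    for url in captured:
        print(url, "->", "product" if is_product_url(url) else "skip")
```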

Step 3: Select the BetaList single product recipe

Select the BetaList single product recipe from the recipe library and click the “Use in a workflow” option.

Step 4: Add the captured URL(s) via data input

Once the workflow is created, add the Data Input automation, then add the previously captured page URL(s) using the Manual paste/list of inputs option. You can add a single URL or bulk URLs.

Step 5: Run the workflow

Run your workflow to get the results.

Step 6: View and Save the results

Once the workflow has finished running, you can view the results and export them to CSV or Google Sheets.


Marketing Specialist | Content Writer

Experienced in SaaS content writing; helps customers automate time-consuming tasks and solve complex scraping cases with step-by-step tutorials and in-depth articles.

Follow me on LinkedIn for more SaaS content